The sudden democratization of Generative Artificial Intelligence (genAI) tools, especially those backed by Large Language Models (LLMs), has inflamed passions and called into question our fundamental understanding of intelligence, consciousness, and reasoning. For some, LLMs are the latest expression of age-old questions. Can machines think? Can machines become sentient? What is consciousness? From philosophers to Star Trek fans, many have asked those questions. For others, LLMs are an excellent source of clickbait headlines:

- This AI says it has feelings. It’s wrong. Right?

- Google Engineer claims AI Chatbot is sentient: Why That Matters

- A conversation with Bing’s Chatbot left me deeply unsettled

In this article, I would like to demystify what AI is, and LLMs specifically, and explain why they do not “think” in the way you might, indeed, be thinking.

Intelligence

To talk about artificial intelligence, I must first talk about human intelligence. Our story presumably started 200,000 to 300,000 years ago, with the emergence of the species Homo sapiens. In Latin, homo sapiens means “intelligent human”. Today, modern humans are classified as the subspecies Homo sapiens sapiens, which can be interpreted as “human who thinks about how it thinks”. Scientists have long believed that a major aspect of our cognitive makeup is metacognition: the ability to think about how we think. This ability, and therefore the ability to change how we think, is the keystone of modern humans’ capacity to innovate and invent.

Logic

With the invention of the computer program (see Ada Lovelace), we stepped from the world of mechanical computing (e.g., the abacus) to the world of logical systems. A logical system is a way to encode reasoning without getting encumbered by the details. It abstracts details away to form rules that can be applied in many different settings to reach a conclusion.

For instance, we could create a logical system to count how many objects are in my backpack. We don’t need to define every type of object: “object” is an abstraction of wallet, keys, phone, etc. (If you are a software engineer, this is starting to look familiar.) Computers are the physical expression of logical systems, so computers are machines that, given the proper logical system, can reason logically.
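If you are a software engineer, the backpack example maps onto an abstract type. Here is a minimal Python sketch; the class and function names (`Item`, `Wallet`, `Keys`, `Phone`, `count_objects`) are hypothetical, chosen only for illustration. The counting rule knows nothing but the `Item` abstraction, so it applies unchanged to a backpack, a drawer, or any container holding new kinds of objects.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """The abstraction: anything that can go into a container."""
    name: str


# Concrete kinds of objects. The counting rule below never mentions them.
class Wallet(Item):
    pass


class Keys(Item):
    pass


class Phone(Item):
    pass


def count_objects(container: list[Item]) -> int:
    """One rule that works for any container, whatever the objects are."""
    return sum(1 for thing in container if isinstance(thing, Item))


backpack = [Wallet("brown wallet"), Keys("house keys"), Phone("work phone")]
drawer = [Keys("spare keys"), Item("stapler")]
print(count_objects(backpack))  # 3
print(count_objects(drawer))    # 2
```

Adding a new object type (say, a `Charger`) requires no change to `count_objects`; that is the abstraction doing its job.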

Reason

Yes, computers can reason logically, but computers do not have Reason (note the capitalization). “Reason is the capacity of applying logic consciously by drawing conclusions from new or existing information, with the aim of seeking the truth.” Logical reasoning by following a set of instructions is not Reason. A computer does not think; it computes (see Automated reasoning and First-order logic). We are the ones telling the computer how to reason.
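To make “telling the computer how to reason” concrete, here is a minimal forward-chaining sketch. Forward chaining is one classic automated-reasoning technique; this version is simplified to propositional if-then rules rather than full first-order logic, and the rules themselves are hypothetical. The program derives conclusions only by mechanically applying the rules a human gave it, so it can never conclude anything those rules do not already entail.

```python
Rule = tuple[frozenset[str], str]  # (premises, conclusion)


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Repeatedly fire every rule whose premises are all known, until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rules, written by a human.
rules: list[Rule] = [
    (frozenset({"socrates is a man"}), "socrates is mortal"),
    (frozenset({"socrates is mortal"}), "socrates will die"),
]

derived = forward_chain({"socrates is a man"}, rules)
print(sorted(derived))
# ['socrates is a man', 'socrates is mortal', 'socrates will die']
```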

More importantly, I strongly agree with Mark H. Bickhard and Robert L. Campbell, who argued that logical systems “can’t construct new logical systems more powerful than themselves”, so reasoning and rationality must involve more than a system of logic (see Reason compared to Logic). This means that a computer cannot, by itself, start solving harder problems than the ones its original system could solve. Humans, on the other hand, have metacognition. This is how we can create logical systems that are more powerful than the previous ones, and this is where innovation and invention come from. “But what about data?” you cheekily ask! 😛 I’ll get to it in a moment… (if you can’t wait, read this).

Computation

If you are still with me at this point, let’s go even deeper! If computers compute by following a logical system, then we must strive to create more powerful logical systems for computers to compute with! The greater the system, the harder the problems it can solve! In the 20th century, mathematicians became very interested in measuring the ability of a logical system to answer questions, and also how long it would take to compute the answer. Welcome to the theory of computation! You very likely already know its most famous concept: the Turing machine!
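A Turing machine is simple enough to simulate in a few lines: a tape, a read/write head, a current state, and a table of rules. The sketch below is a minimal, hypothetical simulator (the transition-table format and the `INCREMENT` machine are my own illustrative choices, not a standard encoding); the example machine adds 1 to a binary number.

```python
MOVES = {"L": -1, "R": 1}

# Transition table: (state, symbol read) -> (symbol to write, head move, next state).
# This hypothetical machine adds 1 to a binary number, starting at its rightmost bit.
INCREMENT = {
    ("carry", "1"): ("0", "L", "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", "R", "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", "R", "halt"),   # ran off the left edge: write a new leading 1
}


def run_turing_machine(tape_input, transitions, state, head, blank="_", max_steps=10_000):
    tape = dict(enumerate(tape_input))  # sparse tape: position -> symbol
    steps = 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = transitions[(state, tape.get(head, blank))]
        tape[head] = write
        head += MOVES[move]
        steps += 1
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, blank) for i in cells).strip(blank)


def increment(bits):
    return run_turing_machine(bits, INCREMENT, "carry", head=len(bits) - 1)


print(increment("1011"))  # 1100
print(increment("111"))   # 1000
```

Everything the machine “knows” lives in the transition table; change the table and you get a different machine.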

AI was invented the day computers were invented, because understanding computation means understanding the power of logical systems, which are in essence intelligent. AI and computation are so intertwined that it was also Alan Turing who proposed the Turing test. The Turing test is an experiment in which a human is asked to tell a human and an AI apart based only on their outputs. Where have you heard that before? Google’s AI passed a famous test - and showed how the test is broken. With the Turing test, Alan Turing gave us a definition of AI:

AI is a computer-made decision that, by its “smartness” (computation), is indistinguishable from a human-made decision.

On a side note, the theory of computation is a very profound and philosophical topic. It gave us the compiler, problem reduction, and so many beautiful ways of understanding the world.

Data

In Alan Turing’s time, computers were all deterministic systems: given an input, a computer would always give the same output. And human computers were more powerful, reliable, and trusted than non-human computers (see Katherine Johnson). As computer engineering improved, with hard drives, flash memory, and faster CPUs, computers could handle larger and larger amounts of data.

At first, AI algorithms were purely analytical, meaning that given a problem, the solution was a mathematical equation that provided an exact and deterministic answer every time. Even with data, algorithms were deterministic, like the linear regression algorithm. As data and processing power grew, it became harder to compute analytical solutions (e.g., linear programming). Thus, we needed to start approximating things and using statistics to derive conclusions. Things became stochastic, that is, dependent on probabilities. A stochastic system may produce a different output every time, hence the probabilities.
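The difference between an analytical and a stochastic approach can be sketched with one problem solved twice: fitting a line to data. The closed-form least-squares formula returns exactly the same answer on every run, while stochastic gradient descent (one common stochastic method, chosen here for illustration; the article does not name a specific one) samples data points at random and only approximates that answer. The data points, learning rate, and step count are assumptions for the example.

```python
import random


def fit_line_exact(xs, ys):
    """Analytical least-squares fit: same input, same output, every time."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def fit_line_sgd(xs, ys, seed, steps=20_000, lr=0.01):
    """Stochastic gradient descent: approximates the line by sampling one point at a time."""
    rng = random.Random(seed)
    slope, intercept = 0.0, 0.0
    for _ in range(steps):
        i = rng.randrange(len(xs))
        error = slope * xs[i] + intercept - ys[i]
        # Gradient step on half the squared error of this single sample.
        slope -= lr * error * xs[i]
        intercept -= lr * error
    return slope, intercept


xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]  # points on y = 2x + 1
print(fit_line_exact(xs, ys))        # (2.0, 1.0)
print(fit_line_sgd(xs, ys, seed=1))  # close to (2.0, 1.0); the path taken depends on the seed
```

On this tiny, noise-free dataset both methods agree; the stochastic one becomes attractive when the data is too large for an exact formula to be practical.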

Machine Learning (ML) emerged as a subfield of AI. It focuses on grouping things, whether we already know what groups exist (supervised ML) or not (unsupervised ML). For instance, ML can calculate a customer’s probability of renewing (which group does this customer belong to: Renew or Churn?), or whether an image contains a cat or a dog. This grouping is done using various statistical processes, which produce a stochastic answer (i.e., it may change depending on your data). Every “truth” derived by an ML model comes from data plus its statistical processing, and that includes LLMs as well. Thus, the quality of your data strongly determines the quality of the system’s conclusions.
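Here is a minimal sketch of the supervised/unsupervised distinction, assuming hypothetical customer data with two made-up features (months as a customer, support tickets filed). For the supervised case I use a simple nearest-centroid classifier and for the unsupervised case k-means; both are illustrative choices, not the only (or best) techniques. Note that k-means starts from randomly chosen centers, which is one source of the stochasticity described above.

```python
import math
import random
from statistics import mean

# Hypothetical customers: (months as a customer, support tickets filed).
renewed = [(24, 1), (36, 0), (30, 2)]
churned = [(3, 5), (5, 7), (2, 6)]


def centroid(points):
    return (mean(p[0] for p in points), mean(p[1] for p in points))


def nearest(point, centers):
    """Index of the center closest to `point` (Euclidean distance)."""
    return min(range(len(centers)), key=lambda i: math.dist(point, centers[i]))


# Supervised: the groups ("renew", "churn") are known, so we learn one centroid per label.
model = {"renew": centroid(renewed), "churn": centroid(churned)}


def classify(point):
    labels = list(model)
    return labels[nearest(point, [model[label] for label in labels])]


# Unsupervised: no labels, so k-means has to discover k groups on its own.
def k_means(points, k, seed, iterations=10):
    centers = random.Random(seed).sample(points, k)  # random start: results can vary with the seed
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centers)].append(p)
        # Move each center to the middle of its cluster (keep it if the cluster is empty).
        centers = [centroid(c) if c else centers[i] for i, c in enumerate(clusters)]
    return clusters


print(classify((28, 1)))  # renew
print(classify((4, 6)))   # churn
print(k_means(renewed + churned, k=2, seed=0))
```

In both cases, the “answer” is entirely a product of the data and the statistical procedure applied to it.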

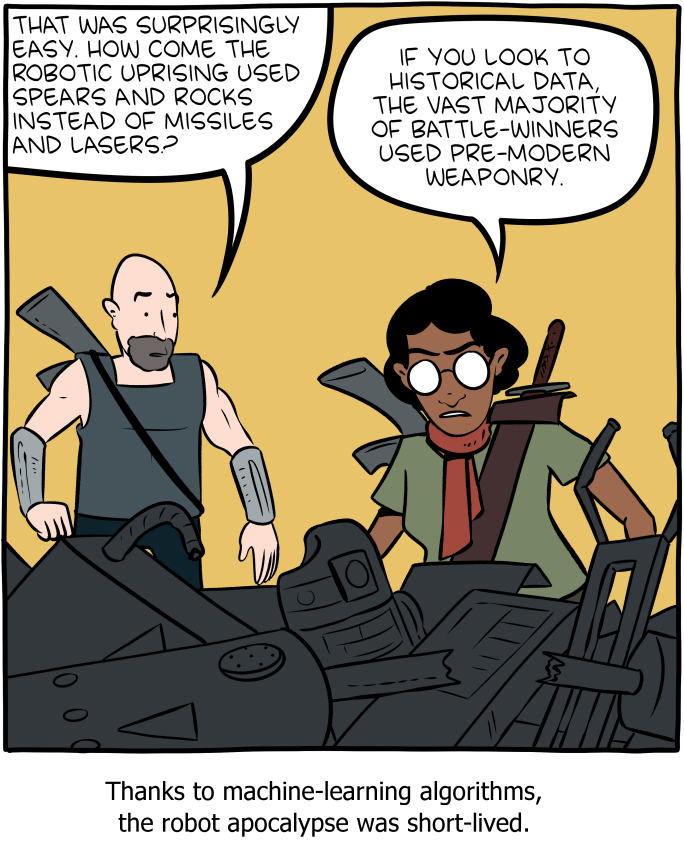

Mandatory SMBC reference:

A philosophical note: we are witnessing a real-life version of the debate between empiricists (knowledge comes from data) and rationalists (knowledge comes from reason), and that’s OK. We need both.

Can an AI tell us something that we didn’t know before?

No, because logical systems “can’t construct new logical systems more powerful than themselves”.

Even with data?

Epistemology is the study of how we know what we know. Logical systems are excellent at proving what they know. If an algorithm generates a new discovery, that is mathematically only possible because the discovery was already contained in the data. An excellent example of this is the application of machine learning in physics (see Scientific Machine Learning). Those cases do not violate the epistemological assumption that knowledge comes from somewhere. In the same way that a microscope doesn’t know something we don’t, algorithms only accelerate the emergence of evidence; they do not invent it.
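A toy illustration of the microscope argument, assuming synthetic free-fall “measurements” generated with g = 9.81 m/s² (this is a plain least-squares fit, far simpler than actual scientific machine learning). The fit “discovers” g only because g was already baked into the data; feed it data generated with a different g and it will report that value instead.

```python
# Synthetic "measurements": fall distances generated with g = 9.81 m/s^2.
G_TRUE = 9.81
times = [0.5, 1.0, 1.5, 2.0, 2.5]                 # seconds
distances = [0.5 * G_TRUE * t**2 for t in times]  # meters


def estimate_g(times, distances):
    """Least-squares fit of d = (g / 2) * t^2, i.e. a line through the origin in t^2."""
    t2 = [t**2 for t in times]
    k = sum(a * d for a, d in zip(t2, distances)) / sum(a * a for a in t2)
    return 2 * k


print(round(estimate_g(times, distances), 2))  # 9.81
```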

Deep Learning

Alright. ☕ Let’s recap. ☕

Computers use logical reasoning to compute things. We can create special types of logical reasoning, called AI, to perform specific tasks in a very smart way. Data and statistical processing really help achieve a wider range of tasks, faster and better. Machine Learning (ML) is one example of this approach.

Deep learning is a subfield of ML that uses a specific logical system called a neural network (think of it as a specific mathematical equation), with an approximation (see Rectifier) that makes it easier to compute, and a TON of data.
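To demystify “think of it as a specific mathematical equation”, here is a minimal sketch of a neural network’s forward pass with the rectifier (ReLU) activation, in plain Python. The weights are hand-picked for illustration (a tiny 2-2-1 network that computes XOR), not learned; in deep learning, finding such numbers is what the TON of data is for, and real networks have millions or billions of them.

```python
def relu(z: float) -> float:
    """Rectifier: keeps positive values, zeroes out the rest."""
    return max(0.0, z)


def dense(inputs, weights, biases, activation=None):
    """One layer: each output is a weighted sum of the inputs plus a bias."""
    outputs = []
    for row, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs


# Hand-picked (not learned) weights for a 2-2-1 network that computes XOR.
W1, B1 = [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]
W2, B2 = [[1.0, -2.0]], [0.0]


def network(x1: float, x2: float) -> float:
    hidden = dense([x1, x2], W1, B1, activation=relu)
    return dense(hidden, W2, B2)[0]


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, network(a, b))  # outputs 0.0, 1.0, 1.0, 0.0
```

The whole “network” is nested multiplications, additions, and `max(0, z)`: an equation, not a mind.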

On a side note, neural networks were invented in the late 1950s (Perceptron) but became useful only recently, once GPU improvements let us make them very large and storage improvements let us feed them a lot of data.
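The original Perceptron is small enough to sketch too. Below is a minimal version of the classic perceptron learning rule, trained on the logical AND function; the data, learning rate, and epoch count are illustrative assumptions. Unlike a network with hand-picked weights, this one finds its weights from examples.

```python
def predict(weights, bias, x):
    """Fire (1) if the weighted sum crosses zero, otherwise stay silent (0)."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, epochs=20, lr=1):
    weights, bias = [0] * len(samples[0][0]), 0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            # Perceptron learning rule: nudge the weights toward the correct answer.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND)
print([predict(weights, bias, x) for x, _ in AND])  # [0, 0, 0, 1]
```

A single perceptron can only learn linearly separable functions like AND; it cannot learn XOR, which is one reason larger, multi-layer networks matter.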

But something was missing. Data and computational power by themselves are not sufficient to derive very complicated conclusions. Data is not knowledge; it’s information. How do we represent data such that it forms knowledge? Knowledge representation and reasoning has been a field of study in AI since the beginning, but mathematically, it was very difficult. We tried knowledge graphs and other crazy stuff, until…

Until the Transformers 🚗🤖 and Encoders! I am oversimplifying, but in essence, we developed approximations that made it possible to vastly increase the number of parameters a model could compute AND, simultaneously, to tell the model what was interesting about the data.
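The “tell the model what was interesting about the data” part is, roughly, the attention mechanism. Below is a stripped-down sketch of scaled dot-product attention in plain Python, assuming toy vectors; real Transformers add learned projection matrices, multiple heads, and much more. Each query scores every key, turns the scores into weights with a softmax, and returns the correspondingly weighted average of the values.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each output is a weighted average of the values,
    weighted by how well the query matches each key."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs


# Toy example: two "tokens" with 2-dimensional keys and 1-dimensional values.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0], [20.0]]
query = [[4.0, 0.0]]  # strongly matches the first key
print(attention(query, keys, values))  # close to [[10.0]]: attention focuses on the first token
```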

Language

Also, it turns out that language is very logical… given enough data, you can easily approximate stochastically what comes next in a sentence. DUN DUN DUNNN…
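A bigram model is the simplest possible version of “approximating what comes next”: count which word follows which, then turn those counts into probabilities. This is a minimal sketch on a hypothetical three-sentence corpus; LLMs work on tokens rather than whole words and use a neural network instead of a count table, but the output is the same kind of thing, a probability distribution over what comes next.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn raw counts into a probability distribution over the next word."""
    following = counts.get(word.lower(), Counter())
    total = sum(following.values())
    return {w: c / total for w, c in following.items()}


corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigrams(corpus)
probs = next_word_probabilities(model, "the")
print(max(probs, key=probs.get))  # cat
```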

Now, let’s put it all together.

Large Language Models

Large Language Models (LLMs) are a specific type of Deep Learning algorithm whose logical system uses statistical approximation and VAST amounts of data to simulate language.

An LLM does not think. An LLM is a stochastic parrot 🦜.

Still don’t believe me? Watch this!

An LLM computes, in a mathematically very impressive way, the statistically best response to a prompt and its context. LLMs do not think in the sense you and I mean when we colloquially say “I think”.
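The “statistically best response” is built one token at a time. Here is a minimal sketch of that final step, assuming hypothetical scores (logits) for three candidate tokens: a softmax turns the scores into probabilities, and the model either takes the most likely token (greedy decoding, temperature 0) or samples one at random, which is why the same prompt can produce different answers.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def pick_next_token(tokens, logits, temperature=1.0, rng=None):
    """Greedy choice at temperature 0, otherwise a weighted random draw."""
    if temperature == 0:
        return tokens[logits.index(max(logits))]
    probs = softmax(logits, temperature)
    rng = rng or random.Random()
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical scores a model might assign after the prompt "The sky is".
tokens = ["blue", "clear", "falling"]
logits = [3.0, 1.5, -1.0]
print(pick_next_token(tokens, logits, temperature=0))  # blue
print(pick_next_token(tokens, logits, temperature=1.0, rng=random.Random(42)))  # a random draw; "blue" is most likely
```

No step in this loop involves understanding the sky; it is arithmetic over scores followed by a weighted coin toss.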

AI does NOT think. Yet.

Conclusion

There might come a day when computers, armed with powerful algorithms and data, achieve general cognition at levels similar to humans. Given any new problem, computers could then create a new logical system to solve it. But that day has not come yet. And the people claiming that we are close to it have a strong financial interest in making you believe the hype (see OpenAI, “Planning for AGI and beyond”).

In conclusion, AI is great at automating many tasks, but thinking is not one of them!