Artificial Intelligence (AI) is now ubiquitous in our daily lives, and, willingly or unwillingly, we have become its guinea pigs 🐹. In the future, the AIpocalypse may yet be an action-packed fight against the Terminator, but for now, it is something closer to a boring dystopia à la 1984.

🤖 💀

In this article, I introduce the notions of AI risk, AI harm, and AI threat, and present recent examples of harmful AI. I also point to a few resources to keep you informed and safe 👀.

The Risk, the Harm, and the Ugly Threat

AI Risk

An AI risk is anything that can go wrong, knowingly or unknowingly, with or because of a model, at any point in the design, training, or production pipeline of an AI system. IBM has invested heavily in AI governance and created this wonderfully terrifying AI Risk Atlas. It is similar in spirit to MITRE's CVE list, which catalogs publicly disclosed cybersecurity vulnerabilities. Cataloging risks is a crucial first step toward mitigating them. “Know Thy Enemy.”

AI Harm

An AI harm is a harmful or near-harmful consequence of deploying and using an AI system. Collecting and cataloging harms is a well-established (and legally required) practice in aviation all around the world.

A common saying in aviation: Regulations are written in blood. ✈️ 🩸

Fortunately, the lack of AI regulation has not stopped open-source efforts from coalescing. For instance, the AI Incident Database actively catalogs incidents, but its reach is limited to what has been publicly reported. Drawing on the lessons of aviation history, Dr. Sean McGregor, Director of the UL Digital Safety Research Institute, advocates for more open digital safety standards:

Failing to share insights with competitors for how to save lives is a moral failing and not possible in modern aviation.

Cybersecurity has CVE; aviation has agencies like the American FAA or the French BEA. We need the same for AI before too much blood is spilled.

AI Threat

An AI threat is a threat caused by the malicious use of AI. AI threats have exploded with generative AI, which lowers both the barrier to entry and the cost of cyberattacks. OWASP, a leading open-source cybersecurity community that, among other things, focuses on identifying top threats, has identified the top 10 threats to LLM applications. Gone are the days when you could spot phishing by looking for typos and awkward English. Now, attackers are using LLM-backed chatbots to phish and spear-phish. Furthermore, deepfakes pose a real danger to consumers, citizens, and public institutions.

The Boring AI Dystopia

Unfair Justice System

The now-famous ProPublica study on Machine Bias is one of the most notable works on harmful AI of recent years and should be required reading in any data-related course, as it clearly demonstrates that an AI is only as impartial as its data. It describes how the justice system uses data and algorithms to estimate recidivism risk and to help determine sentences. In the USA, institutional racism refers to the ensemble of laws, systems, practices, and policies that perpetrate and perpetuate discrimination against minoritized groups. Thus, the data collected from this system will carry institutional racism within it.

An algorithm can never be “better” than the data you feed it; it cannot transcend it. I wrote an in-depth explanation of how AI “thinks” over here.

AI perpetuates the worst in our society, automatically, at scale.
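To make the “only as impartial as its data” point concrete, here is a toy simulation. It is a hypothetical sketch, not ProPublica's methodology and not how any real risk-assessment tool works: two made-up groups reoffend at exactly the same true rate, but one is policed twice as heavily, so more of its reoffenses end up in the records. The “model” (a hypothetical `fit_risk_model` that just memorizes the recorded re-arrest rate per group) faithfully learns the policing bias as if it were a difference in behavior. All rates below are invented for illustration.

```python
import random


def simulate_records(n, true_rate, detection_rate, rng):
    """Simulate historical records for n people (hypothetical data).

    A reoffense only enters the data if it is detected, so the recorded
    outcome depends on policing intensity, not just on behavior.
    """
    records = []
    for _ in range(n):
        reoffended = rng.random() < true_rate
        detected = rng.random() < detection_rate
        records.append(reoffended and detected)
    return records


def fit_risk_model(records_by_group):
    """'Train' the simplest possible model: the recorded re-arrest rate per group."""
    return {group: sum(recs) / len(recs) for group, recs in records_by_group.items()}


rng = random.Random(42)  # fixed seed so the run is reproducible
# HYPOTHETICAL numbers: both groups reoffend at the same true rate (30%),
# but group B is policed twice as heavily, so its reoffenses are detected twice as often.
history = {
    "A": simulate_records(10_000, true_rate=0.30, detection_rate=0.40, rng=rng),
    "B": simulate_records(10_000, true_rate=0.30, detection_rate=0.80, rng=rng),
}
model = fit_risk_model(history)
for group, risk in model.items():
    print(f"group {group}: predicted risk {risk:.2f}")
```

Both groups behave identically, yet the model rates group B roughly twice as risky (around 0.24 versus 0.12), simply because that is what the records say. A more sophisticated model trained on the same records would have no way to tell the difference either.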

Automatic Dystopia

The problem does not stop with crime statistics. Facial recognition is used extensively by the US State Department, culminating in a database of 117 million Americans as of 2016. I recently went through passport control without having to show my passport. This was both amazing and terrifying. Why terrifying? Because we very likely all have doppelgangers, and some of them may cause us trouble. The invasion of privacy is also a legitimate concern, especially in countries that have laws against unreasonable searches.
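Why do doppelgangers matter so much at this scale? A back-of-the-envelope sketch: when one face is searched against every entry in a database (a one-to-many search), even a tiny per-comparison false match rate adds up. The database size is the figure quoted above; the false match rate of one in a million is a HYPOTHETICAL value chosen for illustration, not a measured figure for any real system, and the sketch assumes comparisons are independent, which real systems are not.

```python
import math


def expected_false_matches(database_size: int, false_match_rate: float) -> float:
    """Expected number of wrong identities returned when one probe face is
    compared against every entry, assuming independent comparisons."""
    return database_size * false_match_rate


def prob_at_least_one_false_match(database_size: int, false_match_rate: float) -> float:
    """P(at least one false match) = 1 - (1 - FMR)^N, computed stably with log1p/expm1."""
    return -math.expm1(database_size * math.log1p(-false_match_rate))


N = 117_000_000  # database size quoted in the text (2016)
FMR = 1e-6       # HYPOTHETICAL per-comparison false match rate

expected = expected_false_matches(N, FMR)
prob = prob_at_least_one_false_match(N, FMR)
print(f"expected false matches per search: {expected:.0f}")
print(f"probability of at least one false match: {prob:.6f}")
```

Under these assumptions, a single search is expected to return about 117 strangers who “match” the probe face, and at least one false match is a near certainty. Tightening the rate by a factor of ten still leaves roughly a dozen per search.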

Algorithm Addiction

Social media algorithms, which control what appears in your feed, are designed to maximize attention and retention. Intended or not, those algorithms cause addiction. Call it compulsion or addiction; the science is increasingly converging on its negative effects on children, teens, and adults.

Propaganda & Misinformation

This article was written in 2024, an election year in the United States. These will be interesting times. On one hand, we have social media algorithms hooking your attention and perpetuating (or even favoring) misinformation. On the other, we have easy access to unregulated tools that are very good at producing propaganda, automatically, at scale. Researchers have raised alarms about this dangerous cocktail, but public action has been limited.

The Environmental Cost of This Blog’s Banner

During the 2021-2022 winter, violent demonstrations shook Kazakhstan. These demonstrations were catalyzed by skyrocketing electricity prices, which were driven up by crypto-miners. You read that right. People mining Bitcoin and other cryptocurrencies used data centers in Kazakhstan that consumed so much electricity they caused a near-revolution.

Now, consider that training a GPT model requires an estimated 10 gigawatt-hours (10 million kilowatt-hours), and that ChatGPT’s daily usage is estimated at 1 gigawatt-hour. By comparison, the average US household consumes about 0.010 gigawatt-hours (10,000 kilowatt-hours) per year.
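To put those estimates on a human scale, the short sketch below converts them into household equivalents. It only uses the three figures quoted above (themselves estimates, not measurements); the helper names are made up for this illustration.

```python
# Figures as quoted in the text (estimates, not measurements).
GPT_TRAINING_GWH = 10.0         # one-off cost of training a GPT model
CHATGPT_DAILY_GWH = 1.0         # estimated daily cost of running ChatGPT
HOUSEHOLD_GWH_PER_YEAR = 0.010  # average US household consumption per year


def household_years(energy_gwh: float) -> float:
    """How many years an average US household could run on this much energy."""
    return energy_gwh / HOUSEHOLD_GWH_PER_YEAR


def households_powered_per_day(daily_gwh: float) -> float:
    """How many average US households consume this much energy in one day."""
    household_gwh_per_day = HOUSEHOLD_GWH_PER_YEAR / 365
    return daily_gwh / household_gwh_per_day


training = household_years(GPT_TRAINING_GWH)
daily = households_powered_per_day(CHATGPT_DAILY_GWH)
print(f"Training: ~{training:,.0f} household-years of electricity")
print(f"Daily usage: as much as ~{daily:,.0f} households use in a day")
```

With these numbers, training alone equals about 1,000 household-years of electricity, and a single day of usage matches the daily consumption of about 36,500 households. Over a year, usage (365 GWh) dwarfs the one-off training cost.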

In addition to the societal risks imposed on poorer countries with unregulated electricity grids, the carbon cost of generative AI is indecent. How can we justify this in a century when temperatures are expected to rise by 2 to 4 degrees Celsius and displace 3 billion people?

I really like this article on the subject: “Is it worth it?”

AI technology has contributions to make, especially in fighting climate change. This is not an unsolvable problem: innovations in reducing computational needs pre-date generative AI, and regulation would help.

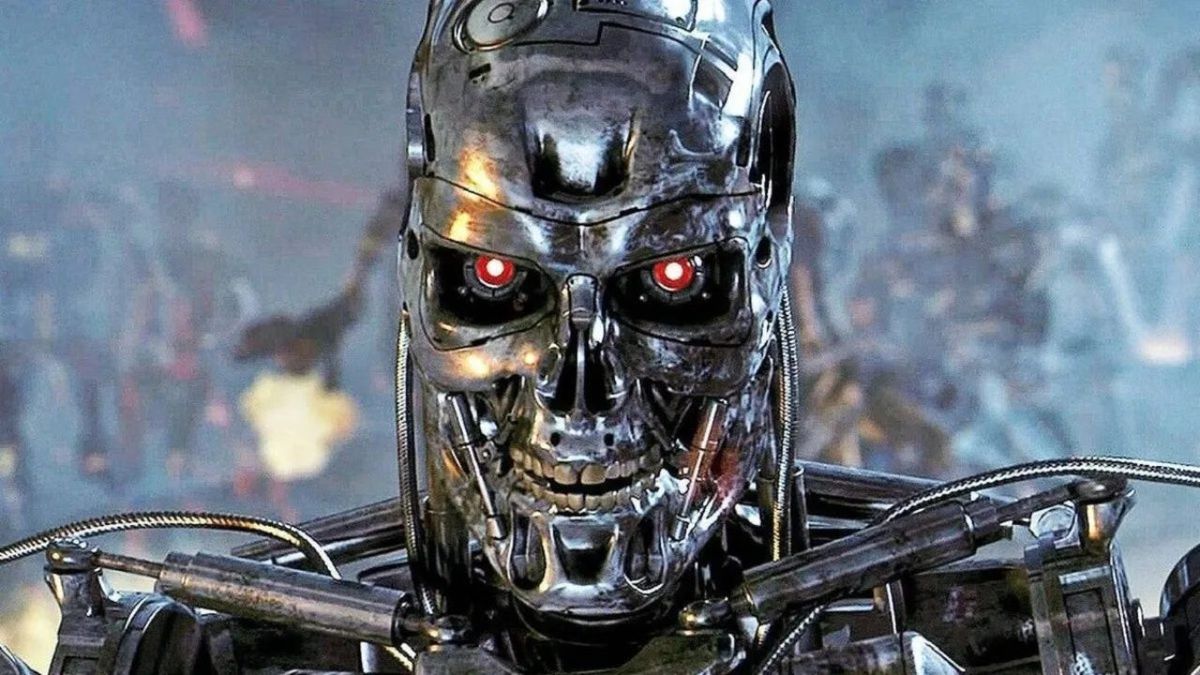

Terminator

In 2017, I attended the inaugural speech of Dr. Dietterich as President of the Association for the Advancement of Artificial Intelligence (AAAI). AAAI is one of the main scientific societies dedicated to researching, developing, and promoting AI. In his speech, Dr. Dietterich said:

The final high-stakes application that I wish to discuss is autonomous weaponry. Unlike all of the other applications I’ve discussed, offensive autonomous weapons are designed to inflict damage and kill people. These systems have the potential to transform military tactics by greatly speeding up the tempo of the battlefield. […] My view is that until we can provide strong robustness guarantees for the combined human-robot system, we should not deploy autonomous weapons systems on the battlefield. I believe that a treaty banning such weapons would be a safer course for humanity to follow.

Weaponized AI exists and is well on its way to being integrated into our daily lives. It will seem innocuous at first, but it is advancing, even if only “hypothetically”.

🤖 💀